OpenAI has once again pushed the boundaries of artificial intelligence by introducing the o1 series, a new generation of AI models built specifically to excel at complex problem-solving in fields like science, coding, and mathematics. This launch represents a significant leap forward in AI reasoning capabilities. It marks a shift from rapid-response models to models that take time to think deeply before answering, much like a human expert would. That extra thinking is why o1 can take noticeably longer, sometimes several minutes, to produce a solution. With enhanced reasoning, o1 outperforms previous models in a variety of tasks, and it’s set to change how developers, researchers, and other professionals interact with AI.

Impressive Performance Metrics

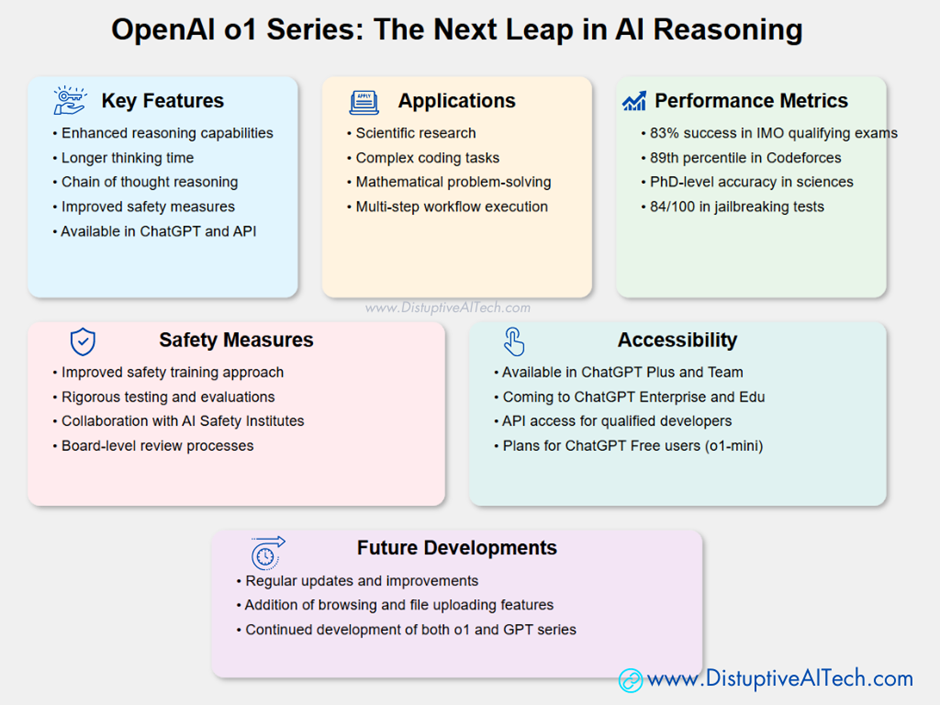

The capabilities of the o1 series are truly remarkable:

1. On a qualifying exam for the International Mathematics Olympiad (IMO), o1 solved 83% of the problems, compared with 13% for GPT-4o.

2. The model reached the 89th percentile in Codeforces coding competitions.

3. o1 exceeded human PhD-level accuracy on a benchmark of physics, chemistry, and biology problems.

The Power of Deep Thinking: What Sets o1 Apart?

The o1 series is designed to spend more time thinking through problems, making it more effective at multi-step and complex tasks. This process mimics how humans approach difficult problems: pausing, reflecting, and then breaking the problem down into manageable steps. Using a chain of thought, the model works through intermediate steps before it answers, and through training it learns to refine that thinking process, try different strategies, and recognize its own mistakes. In essence, it learns how to think, not just how to respond.

In benchmark comparisons, o1 has proven to be a dramatic improvement over previous models like GPT-4o. For example, on the International Mathematics Olympiad (IMO) qualifying exam, GPT-4o solved only 13% of the problems, while o1 solved 83% of them, a remarkable result that demonstrates its stronger reasoning. In competitive programming on Codeforces, where AI models are measured against human programmers, o1 ranked in the 89th percentile, outperforming previous models and most human competitors.

The o1 series isn’t just a slight enhancement—it represents a profound leap forward in AI’s ability to reason. This leap comes from the model’s ability to break down complex tasks into simpler, smaller steps, much like how a PhD-level student would solve advanced problems in physics or chemistry. As a result, o1 is particularly well-suited for fields requiring complex reasoning, such as scientific research, coding, and mathematical modelling.

Safety and Alignment: Building Trustworthy AI

While improving reasoning abilities, OpenAI has also made significant advancements in the safety of the o1 series. AI safety has been a critical focus, particularly as models become more capable and are applied in sensitive or high-stakes environments. OpenAI has introduced a new safety training approach that leverages the reasoning capabilities of o1 to ensure better adherence to safety guidelines and ethical principles.

One major concern in AI safety is jailbreaking, where users attempt to trick AI models into bypassing their built-in safety rules. On one of OpenAI’s hardest jailbreaking tests, scored on a 0 to 100 scale, GPT-4o scored a concerning 22, while the new o1-preview model scored 84, showing far better compliance with its safety rules. OpenAI attributes this improvement to training the model to reason about its safety rules and apply them in context, rather than simply following hardcoded guidelines.

OpenAI’s safety efforts don’t stop at training models to adhere to ethical standards. They’ve also established formal partnerships with AI Safety Institutes in the U.S. and U.K., giving these organizations early access to a research version of the o1 model. These partnerships allow for third-party evaluations and testing, helping to ensure that the models are robust and reliable before they are released to the public. This collaboration is part of OpenAI’s broader Preparedness Framework, a system designed to ensure AI models are thoroughly evaluated for safety risks before they become widely available.

Who Should Use OpenAI o1?

The o1 series is poised to be incredibly useful for anyone working on complex problem-solving tasks, particularly in fields such as science, coding, mathematics, and engineering. Researchers in healthcare can use o1 to annotate cell sequencing data, making it easier to sift through vast amounts of biological information. Physicists, too, will benefit from the model’s ability to generate complex mathematical formulas required for cutting-edge research in fields like quantum optics.

Developers, in particular, will find the o1 model to be a game-changer. The series excels at coding and debugging, helping developers build multi-step workflows that require advanced reasoning. OpenAI is also introducing a smaller, more cost-effective version of the model called o1-mini, which is 80% cheaper than the o1-preview model. Although it has slightly fewer capabilities, it is designed to be faster and more efficient, making it ideal for tasks that require strong reasoning but don’t need extensive world knowledge. This balance of power and efficiency makes o1-mini perfect for developers looking to create or optimize code without breaking the bank.

Revolutionizing AI Reasoning: Learning to Think

The most revolutionary aspect of the o1 model is its ability to think in a structured, logical manner, much like a human expert. Through its chain of thought, the model can pause, analyze the steps it has taken, and adjust its approach if it finds that something isn’t working. This ability to catch and correct its own mistakes sets o1 apart from earlier models, which typically produce a response in a single pass based on learned patterns rather than reasoning through the problem first.

OpenAI also reports that o1 can rival strong human competitors in some settings. On the 2024 American Invitational Mathematics Examination (AIME), o1 solved 74% of the problems on average with a single sample per problem, and 93% when re-ranking 1,000 samples with a learned scoring function. The 93% score places it among the top 500 students nationwide, an extraordinary result for an AI system.
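The jump from 74% to 93% comes from sampling many candidate answers and keeping the one a scoring function ranks highest, a technique often called best-of-N re-ranking. OpenAI has not published how its learned scorer works, so the sketch below is only a toy illustration under stated assumptions: `best_of_n`, `toy_accuracy`, the 40% toy hit rate, and the noisy scorer are all hypothetical stand-ins, not OpenAI’s method, and the toy numbers have nothing to do with the AIME results.

```python
import random
from typing import Callable, List, TypeVar

T = TypeVar("T")


def best_of_n(generate: Callable[[], T], score: Callable[[T], float], n: int) -> T:
    """Sample n candidates from `generate` and return the one `score` ranks highest.

    `generate` stands in for one model sample and `score` for a learned scoring
    function; both are hypothetical placeholders, not OpenAI's actual components.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates: List[T] = [generate() for _ in range(n)]
    # max() evaluates score once per candidate and keeps the first on ties.
    return max(candidates, key=score)


def toy_accuracy(n: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of toy problems solved when keeping the best of n samples."""
    rng = random.Random(seed)
    correct_answer = 42
    solved = 0
    for _ in range(trials):
        # Toy "model" (assumption): right 40% of the time, else a random wrong integer.
        def generate() -> int:
            return correct_answer if rng.random() < 0.4 else rng.randint(0, 41)

        # Toy "scorer" (assumption): favors the right answer, but noise can fool it.
        def score(answer: int) -> float:
            return (1.0 if answer == correct_answer else 0.0) + rng.gauss(0.0, 0.5)

        if best_of_n(generate, score, n) == correct_answer:
            solved += 1
    return solved / trials


if __name__ == "__main__":
    for n in (1, 4, 16):
        print(f"n={n:>2}: {toy_accuracy(n):.2%}")
```

In this toy, accuracy climbs as `n` grows because the scorer is right more often than chance, but the gain is capped by how reliable the scorer is. That is why the quality of the learned scoring function matters as much as the number of samples.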

Conclusion: A New Chapter in AI

The introduction of the o1 series marks a pivotal moment in AI development. By focusing on reasoning, OpenAI has created models that are not only more powerful but also safer and more reliable. The potential applications are vast, from scientific research to software development, and as OpenAI continues to improve these models, we can expect them to unlock entirely new possibilities.

With the o1 series, OpenAI has set the stage for the next chapter of AI innovation, one where machines don’t just mimic human thought—they learn to think like humans. As developers, researchers, and professionals start using these models, the future of AI looks brighter and more capable than ever.
