“The more powerful the tool, the greater the benefit or damage it can cause” – Brad Smith, Chief Legal Officer, Microsoft

Artificial intelligence is not new. Since the 1950s, researchers have been working hard to make machines think and act like humans. But this decade has seen AI grow at lightning speed. Artificial intelligence has the potential to drive strong impact across organizations and across society, whether by moving us towards a more sustainable world, producing enough food for a growing population or improving accessibility for people with disabilities. The pace at which AI is advancing is remarkable, which is exactly why it must be developed and used in a way that earns people's trust.

This impact also raises a host of complex and challenging questions about the future, because the question is no longer just what AI can do, but what AI should do.

What is responsible AI?

According to IBM, “Responsible artificial intelligence (AI) is a set of principles that help guide the design, development, deployment and use of AI—building trust in AI solutions.”

This definition centers on principles: an AI system can be called responsible if it follows all of them. So, what are those principles?

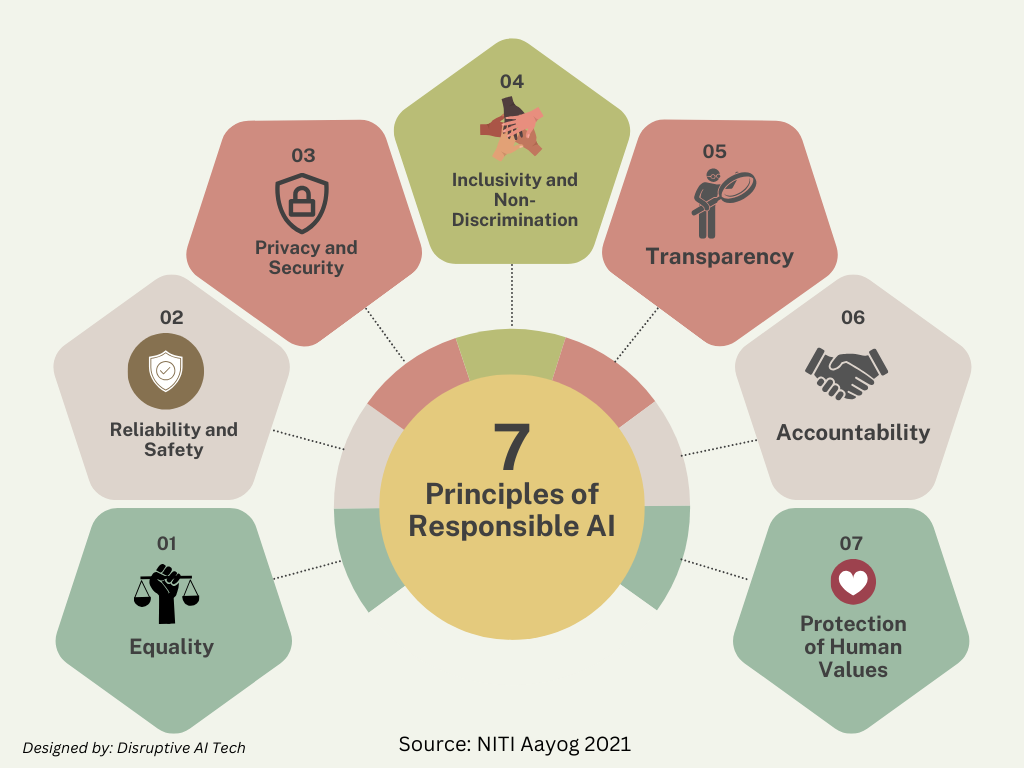

India’s NITI Aayog has proposed seven principles of Responsible AI. Most of them also appear in other countries’ guidelines. Here they are.

#1, Principle of Equality

One of the best-known documentaries on Netflix, ‘Coded Bias’, shows how AI facial recognition systems fail to recognize Black people accurately because most of their training data comes from white people. This violates the principle of equality.

The principle of equality says that AI systems must treat individuals equally when they are in the same circumstances relevant to a decision. It is the responsibility of the AI system’s creators to ensure that people in the same circumstances are treated equally. Questions to ask: Does my training data cover the diversity of the population? How should minority populations in the dataset be treated? How can they be adequately represented?
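The first of these questions, whether the training data covers the diversity of the population, can be checked mechanically. The Python sketch below is a minimal illustration only, assuming each training record is a dictionary carrying a demographic attribute; the field name `skin_tone`, the group labels "A" and "B" and the 30% threshold are hypothetical choices, not part of any official guideline.

```python
from collections import Counter


def group_shares(records, group_key):
    """Return the fraction of records belonging to each value of group_key."""
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented_groups(records, group_key, min_share):
    """List the groups whose share of the training data falls below min_share."""
    shares = group_shares(records, group_key)
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical toy training set: 8 records from group "A", 2 from group "B".
training_data = [{"skin_tone": "A"}] * 8 + [{"skin_tone": "B"}] * 2

print(group_shares(training_data, "skin_tone"))  # {'A': 0.8, 'B': 0.2}
print(underrepresented_groups(training_data, "skin_tone", 0.3))  # ['B']
```

Balanced representation is necessary but not sufficient: a model trained on balanced data can still treat groups differently, so its outcomes should be checked per group as well.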

#2, Principle of Reliability and Safety

AI systems should operate reliably, safely, and consistently under normal circumstances, as well as under unexpected conditions.

For example, an autonomous system used in mining is expected to behave reliably, safely and consistently under normal conditions as well as in unexpected situations. This requires rigorous and varied testing to ensure the reliability and safety of the AI system.

#3, Principle of Privacy and Security

This is a core principle of Responsible AI. AI systems should be developed so that they are secure and respect the privacy of individuals. Internet of Things (IoT) devices, for instance, provide customized solutions by leveraging users’ data, but they must not misuse the data they collect. A home-cleaning robot knows the layout of our house, which makes data theft potentially dangerous. Facial recognition is another example, where a balance must be struck between law enforcement on one side and privacy and security on the other. If a system flags someone as a criminal, the system itself should be designed to catch false positives so that innocent people are not harmed.
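In facial recognition, a false positive means flagging an innocent person. One way to watch for this, sketched in Python below under simplifying assumptions, is to measure the false positive rate separately for each demographic group on labelled evaluation data. The function names, the 0/1 encoding and the toy data are hypothetical; real facial recognition evaluations are considerably more involved.

```python
def false_positive_rate(predictions, labels):
    """Fraction of truly negative cases (label 0) that the system flagged (prediction 1)."""
    preds_on_negatives = [pred for pred, label in zip(predictions, labels) if label == 0]
    if not preds_on_negatives:
        return 0.0  # no negative cases, so no false positives are possible
    return sum(preds_on_negatives) / len(preds_on_negatives)


def false_positive_rate_by_group(predictions, labels, groups):
    """Compute the false positive rate separately for each group label."""
    rates = {}
    for group in dict.fromkeys(groups):  # unique groups in first-seen order
        idx = [i for i, g in enumerate(groups) if g == group]
        rates[group] = false_positive_rate(
            [predictions[i] for i in idx], [labels[i] for i in idx]
        )
    return rates


# Hypothetical evaluation data.
# predictions: 1 = flagged by the system, 0 = not flagged
# labels:      1 = genuinely a match,     0 = not a match
predictions = [1, 0, 0, 0, 1, 1, 0, 0]
labels = [1, 0, 0, 0, 1, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(false_positive_rate_by_group(predictions, labels, groups))  # {'A': 0.0, 'B': 0.5}
```

A large gap between groups, such as 0.0 versus 0.5 in this toy data, would be a signal to investigate before the system is deployed.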

#4, Principle of Inclusivity and Non-discrimination

Consider the Nature article “Millions of black people affected by racial bias in health-care algorithms”. An algorithm widely used in US hospitals to allocate health care to patients was found to systematically discriminate against Black people. The bias arose because the model used past health-care spending as a proxy for health needs, and less money had historically been spent on Black patients with the same level of need.

Exclusion happens when an AI system solves a problem on the basis of human biases. People from minority populations or people with disabilities will find it difficult to participate fully if AI is not inclusive.

#5, Principle of Transparency

The outputs of the AI system should be explainable.

No example explains this better than the match between Lee Sedol and AlphaGo. AlphaGo, playing against Lee Sedol, a famous Go champion, made ‘move 37’, a move that surprised even the experts and seemed beyond human understanding; its reasoning could not be explained. It was only a game, so nobody worried much, but we cannot afford this to happen in healthcare. Hence this principle must be followed throughout the lifecycle of AI development.

#6, Principle of Accountability

Who do you sue when a robot loses your fortune: the developer, the marketer or the robot?

In one case, a Hong Kong-based investor had his money managed by a London-based investment firm using an AI system developed by an Austria-based company. The system scanned online sources such as real-time news and social media to make predictions on US stocks. But it began to lose money regularly, including a loss of $20 million in a single day. The investor decided to sue the investment firm that managed his money even though the firm, while using the AI, had not created it. He sued on the grounds that the firm had allegedly exaggerated the capabilities of the AI system.

An AI system must follow the principle of accountability: it must be clear who is accountable if the system does not work as expected.

#7, Principle of Protection of Human Values

AI should promote positive human values and must not disturb social harmony or community relationships in any way.

Stephen Hawking once said, “The development of full artificial intelligence could spell the end of the human race.” Elon Musk claims that AI is humanity’s “biggest existential threat.” It is immediately clear how nuclear bombs could wipe out humanity; there is no need to explain how dangerous they are. AI is different: how it could pose an existential risk to humanity is more complicated and harder to grasp.

The infamous Cambridge Analytica case exposed how human values can be put at stake when a few people cross the boundaries of acceptable AI use. The scheme manipulated people to influence elections in Nigeria, Kenya, the Czech Republic and India, using social media to spread sensitive posts that pushed target populations to behave the way it wanted.

These principles provide a framework for developing AI systems responsibly and help answer the following four questions:

One, what tools and techniques can be used to practice responsible AI?

Two, what processes and practices need to be followed?

Three, what should governments and other stakeholders consider in order to promote responsible AI?

Four, what policies, standards and regulations should be implemented to ensure responsible AI?

Read the second article to learn about implementing responsible AI!

References

Lectures from Dr Rohini Srivathsa (CTO, Microsoft India)

https://www.ibm.com/topics/responsible-ai

https://iapp.org/news/a/privacy-and-responsible-ai/

https://www.unesco.org/en/artificial-intelligence/recommendation-ethics
