Implementing Responsible AI: A Practical Guide from Principles to Real-World Application

In the first part of this Responsible AI series, we covered the 7 principles of AI (click here to read the first article), which act as guiding principles for formulating policies, developing applications and using AI responsibly. That first part answered two questions: WHY we need responsible AI and WHAT responsible AI is.

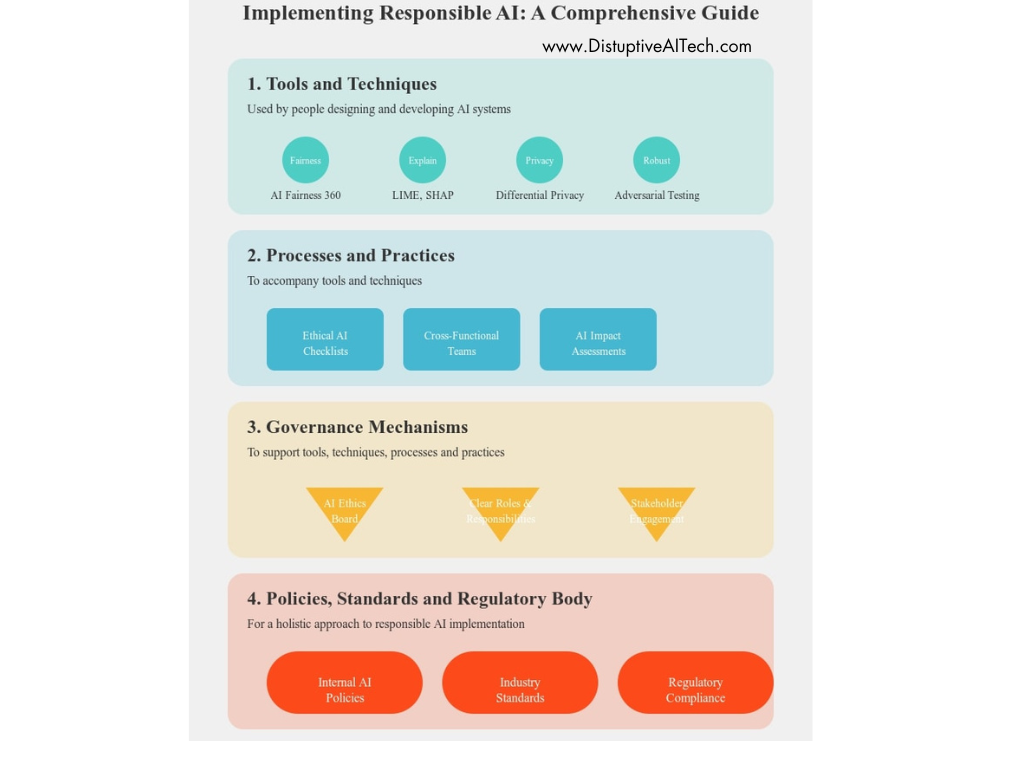

This article is about HOW responsible AI can be ensured. How does one take responsible AI from principles to practice? Moving from principles to practice takes a multi-fold approach:

- Tools and techniques: for the people who design and develop AI systems.

- Processes and practices: to accompany the tools and techniques.

- Governance mechanisms and stakeholder management: to support the tools, techniques, processes and practices.

- Policies, standards and regulatory body: interventions needed to make the approach truly holistic and bring responsible AI principles into practice.

Tools and techniques:

Putting responsible AI principles into practice needs tools that help understand, protect and control AI systems across the lifecycle. When we assess an AI system for responsible AI concerns, that means assessing the product, technology or solution both while it is being developed and after it is deployed.

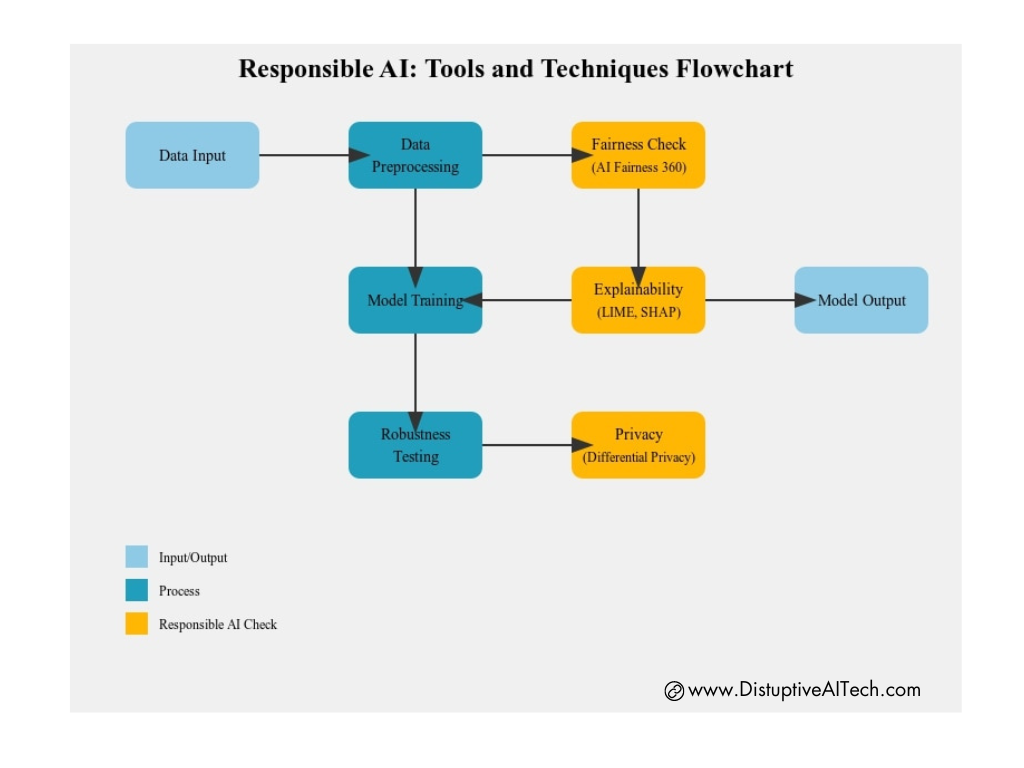

Broadly, tools and techniques are required at three stages of development. First, at the time of data processing, utmost importance should be given to the fairness of the data, and tools like AI Fairness 360 can be used. Second, models are infamous for being unexplainable black boxes: the more complex the model, the harder it is to explain. In such cases, tools like LIME and SHAP can help explain the model's predictions to some extent (read more about them in this article). Third, the model should be robust enough to handle concerns such as the privacy of customers or any other entity. Differential privacy can prove to be a game-changer here. It adds a carefully tuned amount of statistical noise to sensitive data or to results computed from it, which helps protect the data used in AI systems by making re-identification of individuals much harder (read more about this in MIT's article, click here). The accompanying graphic helps in understanding it better.
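
To make the first and third stages more concrete, here is a minimal sketch in plain Python. It is not how AI Fairness 360 or any particular privacy library is implemented; the function names (`disparate_impact`, `private_count`), the toy data and the choice of a simple counting query are all assumptions made for illustration. The first function computes a disparate impact ratio, one common way to check data for group fairness, and the second applies the Laplace mechanism, a standard way to add calibrated noise for differential privacy.

```python
import random


def disparate_impact(outcomes, groups, privileged):
    """Ratio of favourable-outcome rates: unprivileged group / privileged group.

    outcomes: list of 0/1 labels (1 = favourable outcome)
    groups:   list of group labels, same length as outcomes
    A value close to 1.0 suggests similar treatment of both groups.
    """
    priv = [o for o, g in zip(outcomes, groups) if g == privileged]
    unpriv = [o for o, g in zip(outcomes, groups) if g != privileged]
    if not priv or not unpriv:
        raise ValueError("both groups must contain at least one record")
    priv_rate = sum(priv) / len(priv)
    if priv_rate == 0:
        raise ValueError("privileged group has no favourable outcomes")
    return (sum(unpriv) / len(unpriv)) / priv_rate


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential samples with
    rate 1/scale follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Noisy count of the values matching predicate (Laplace mechanism).

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


# Toy data (hypothetical): loan approvals for two groups "a" and "b".
outcomes = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(disparate_impact(outcomes, groups, privileged="a"))  # 0.25 / 0.75

# Toy data (hypothetical): ages of customers; count those over 40 privately.
ages = [23, 45, 31, 52, 67, 38, 41]
print(private_count(ages, lambda age: age > 40, epsilon=0.5))
```

The noisy count differs from run to run, which is the point: an observer cannot tell from a single answer whether any one person was in the data. In practice, a maintained library is a safer choice than hand-rolled noise, since details such as floating-point behaviour and privacy budget tracking matter.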

Processes and practices:

Not every aspect of responsible AI is ready to be implemented as an automated tool, so guidelines are still needed. It is important to have guidelines and checklists that give developers concrete steps to ensure they are developing responsibly. Some guidelines are technology-specific, because AI systems have very different purposes, operations and contexts. This can be achieved via three approaches.

- Guidelines and checklists: these provide guidance materials for modelling, detecting and mitigating security risks and ethics issues throughout the AI product lifecycle. For example, an AI fairness checklist helps prioritise fairness when developing AI, operationalises the concepts, provides structure for improving ad-hoc processes and empowers advocates of fairness.

- Cross-functional teams: cross-functional teams need to collaborate to ensure that all the principles of responsible AI are practised across teams.

- AI Impact Assessment: Microsoft published a complete guide to AI impact assessment in June 2022 (click here for the complete report). It provides detailed steps for evaluating an AI system, such as defining the system profile, intended uses, potential harms and mitigations, with a focus on fairness, transparency and accountability.
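
The elements of an impact assessment listed above (system profile, intended uses, potential harms, mitigations) can also be captured as a small data structure so that gaps are easy to spot. The sketch below is only an illustration: it is not Microsoft's template, and the class names, fields and the example system are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Harm:
    description: str
    principle: str  # the principle at stake, e.g. "fairness"
    mitigations: List[str] = field(default_factory=list)


@dataclass
class ImpactAssessment:
    system_name: str
    intended_uses: List[str]
    harms: List[Harm] = field(default_factory=list)

    def open_items(self) -> List[str]:
        """List what still needs attention before sign-off."""
        items = []
        if not self.intended_uses:
            items.append("no intended uses documented")
        for harm in self.harms:
            if not harm.mitigations:
                items.append(
                    f"unmitigated harm ({harm.principle}): {harm.description}"
                )
        return items


# Hypothetical example system, for illustration only.
assessment = ImpactAssessment(
    system_name="loan-screening-model",
    intended_uses=["pre-screen consumer loan applications"],
    harms=[
        Harm(
            description="lower approval rates for one demographic group",
            principle="fairness",
            mitigations=["monitor approval rates per group each month"],
        ),
        Harm(
            description="applicants cannot see why they were rejected",
            principle="transparency",
        ),
    ],
)

for item in assessment.open_items():
    print(item)
```

A structure like this does not replace the judgement of a cross-functional team, but it makes the checklist reviewable and lets a build pipeline flag assessments that still have open items.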

Governance mechanisms and stakeholder management:

Governance and stakeholder engagement need to be part of a holistic approach. While an organisation establishes a governance system tailored to its unique characteristics, culture, ethical principles and level of engagement with AI, it is also important that organisations and individuals engage externally across their industry and society. It will take all of us working together to maximise AI’s potential for positive change.

With the principles in place, these governance systems are tasked with making sure that policies are developed and implemented, standards are created and best practices are developed, and with creating a culture of integrity by advising on ethical concerns and providing awareness and education to employees.

There are many approaches to governance, and organisations will have to define what might be the best approach for them. Here are the three most common approaches:

- Chief ethics officer: this person acts as a centralised decision maker who helps the organisation quickly develop policies around ethics and ensures accountability for each decision. However, this model is often difficult to scale.

- Ethics office: this involves forming a dedicated ethics team drawn from different parts of the company. With dedicated team members working at all levels of the organisation, an ethics office can be effective in ensuring that policies and processes are followed in a meaningful way by all employees and at every stage of AI engagement.

- Ethics committee: this brings together a diverse group of people from both inside and outside the organisation, which could include experts and senior leaders from the community. Members are not necessarily dedicated solely to ethics; they work across different groups and can help develop and implement policies. This governance model gives an organisation perspectives from people with a very wide range of backgrounds and expertise, as well as potentially unbiased opinions from external members.

Policies, standards and regulatory body:

This is a fast-evolving area: governments and bodies around the world are deliberating on this emerging technology and how it needs to be addressed. What policies and what standards are required?

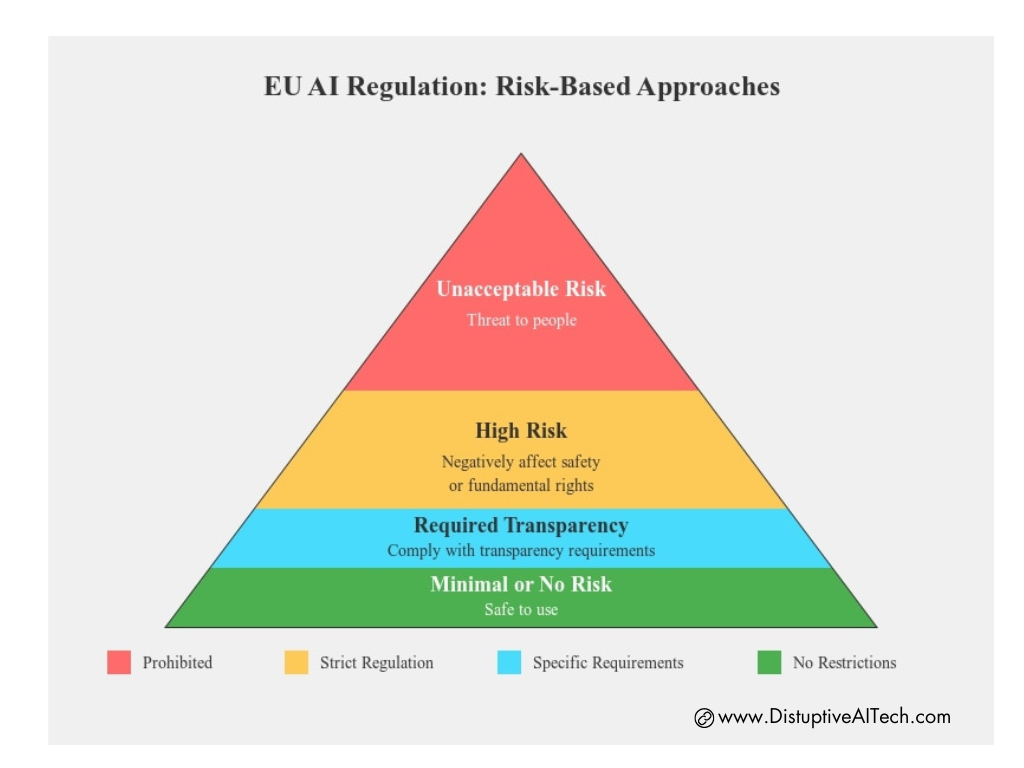

The European Commission published its proposal for a regulation on artificial intelligence and keeps it updated (click here). The regulation follows a risk-based approach, differentiating between uses of AI that create an unacceptable risk, a high risk and a low or minimal risk. The category a system falls into depends on its intended purpose, the severity of the possible harm and the probability of that harm occurring.

- Unacceptable risk: AI systems in this category are considered a threat to people and will be banned. They include cognitive behavioural manipulation of people or specific vulnerable groups (for example, voice-activated toys that encourage dangerous behaviour in children); social scoring; biometric identification; and real-time and remote biometric identification systems such as facial recognition.

- High risk: AI systems that negatively affect safety or fundamental rights will be considered high risk. All high-risk AI systems will be assessed before being put on the market and also throughout their lifecycle. People will have the right to file complaints about AI systems with designated national authorities.

- Required transparency: Generative AI, like ChatGPT, will not be classified as high risk, but will have to comply with transparency requirements and EU copyright law.

- Minimal or no risk: These systems are permitted with no restrictions.
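
An organisation tracking its own AI use cases against these tiers might keep a simple inventory along the following lines. This is only a sketch of the tier structure described above, not legal guidance: the `RiskTier` enum, the `OBLIGATIONS` wording and the example inventory are assumptions made for illustration, and assigning a real system to a tier requires reading the regulation itself.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    TRANSPARENCY = "required transparency"
    MINIMAL = "minimal or no risk"


# Obligations paraphrased from the summary in this article.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "banned",
    RiskTier.HIGH: "assessed before market entry and throughout the lifecycle",
    RiskTier.TRANSPARENCY: "must meet transparency requirements and EU copyright law",
    RiskTier.MINIMAL: "permitted with no restrictions",
}

# Hypothetical inventory of use cases, using the examples given above.
EXAMPLE_INVENTORY = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "generative chatbot": RiskTier.TRANSPARENCY,
}


def obligations_for(use_case, inventory=EXAMPLE_INVENTORY):
    """Return (tier, obligation) for a use case that has been classified."""
    if use_case not in inventory:
        raise KeyError(f"{use_case!r} has not been classified yet")
    tier = inventory[use_case]
    return tier, OBLIGATIONS[tier]


def may_deploy(tier):
    """Only the unacceptable tier is prohibited outright."""
    return tier is not RiskTier.UNACCEPTABLE


tier, duty = obligations_for("generative chatbot")
print(f"{tier.value}: {duty}")
```

Raising an error for unclassified use cases is a deliberate choice here: it forces someone to make the classification decision explicitly instead of defaulting a new system to the lowest tier.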

In summary, principles are necessary but not sufficient. The hard work really begins when you endeavour to turn principles into practice, and that takes a combination of building blocks: tools and techniques, processes and practices, governance and stakeholder engagement, together with policy, standards and regulatory mechanisms.

References:

Lectures from Dr Rohini Srivathsa (CTO, Microsoft India)

https://www.ibm.com/topics/responsible-ai

https://iapp.org/news/a/privacy-and-responsible-ai/

https://www.unesco.org/en/artificial-intelligence/recommendation-ethics
